Learning dexterity from human demonstrations

Published on:

25 April 2024

Primary Category:

Robotics

Paper Authors:

Toru Lin, Yu Zhang, Qiyang Li, Haozhi Qi, Brent Yi, Sergey Levine, Jitendra Malik

Key Details

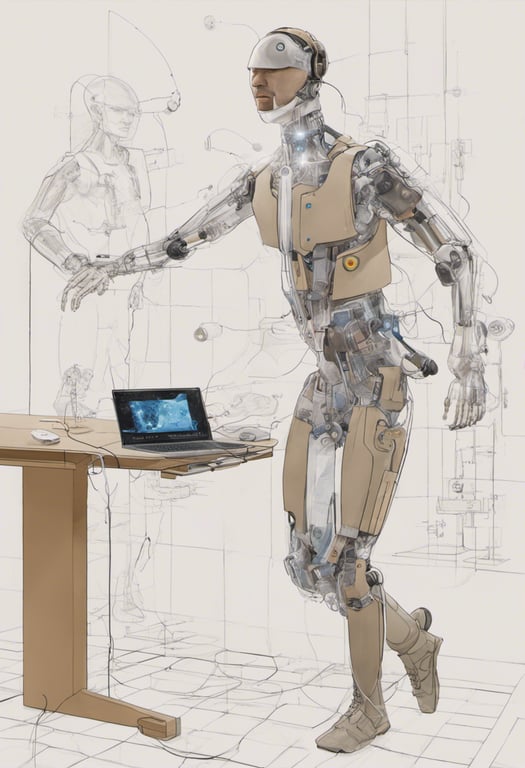

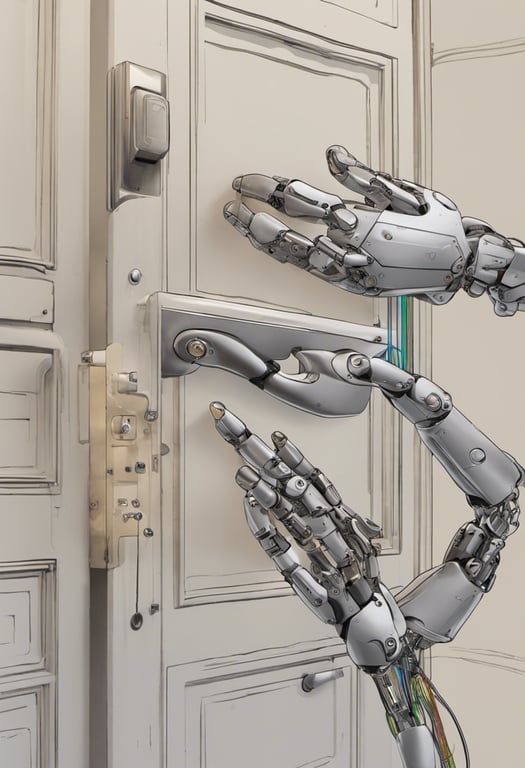

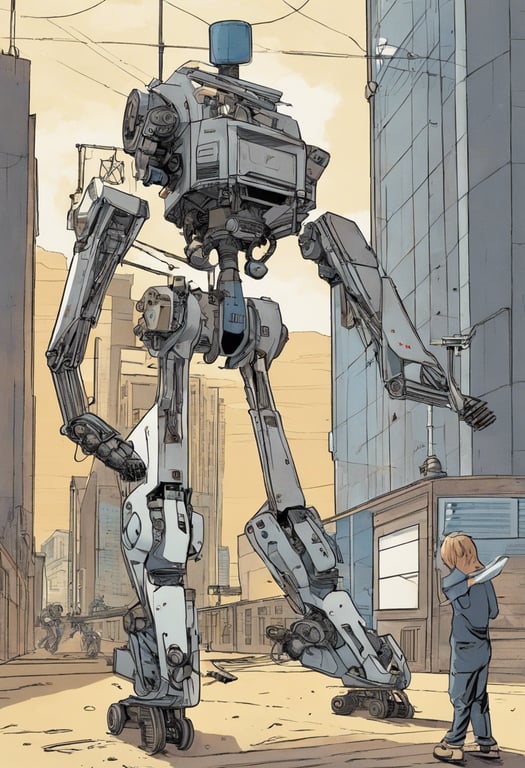

Develop HATO system for efficient bimanual teleoperation data collection

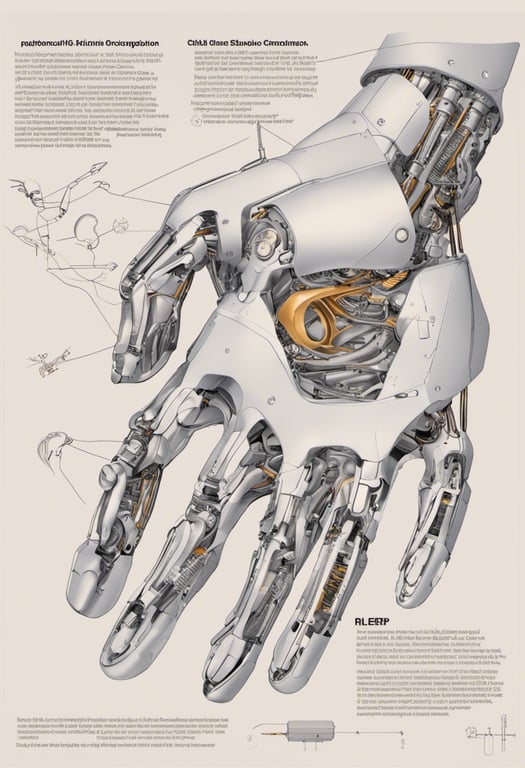

Adapt prosthetic hands to provide rich touch sensing capabilities

Showcase unprecedented bimanual dexterity learned from human demos

Empirically study effects of data size and sensing modalities

Vision + touch crucial for completing tasks robustly

AI generated summary

The authors develop HATO, a low-cost bimanual teleoperation system built from off-the-shelf virtual reality hardware and prosthetic hands equipped with touch sensors. They collect visuotactile demonstration data for complex manipulation tasks and use it to train policies that reproduce the dexterity and motion patterns of the human demonstrators. The key results show that both vision and touch are critical to policy success, and that performance saturates at roughly 100 to 300 demonstrations.
