Learning robot manipulation skills from human demonstrations and tactile sensing

Paper Title:

Push it to the Demonstrated Limit: Multimodal Visuotactile Imitation Learning with Force Matching

Published on:

2 November 2023

Primary Category:

Robotics

Paper Authors:

Trevor Ablett, Oliver Limoyo, Adam Sigal, Affan Jilani, Jonathan Kelly, Kaleem Siddiqi, Francois Hogan, Gregory Dudek

Key Details

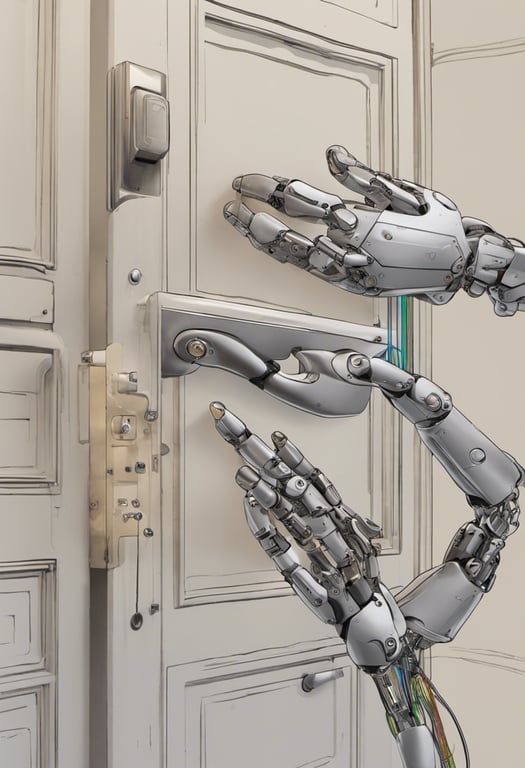

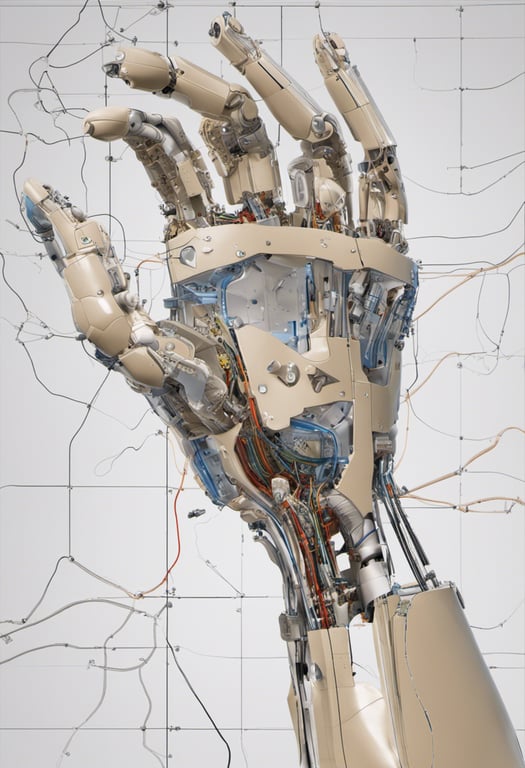

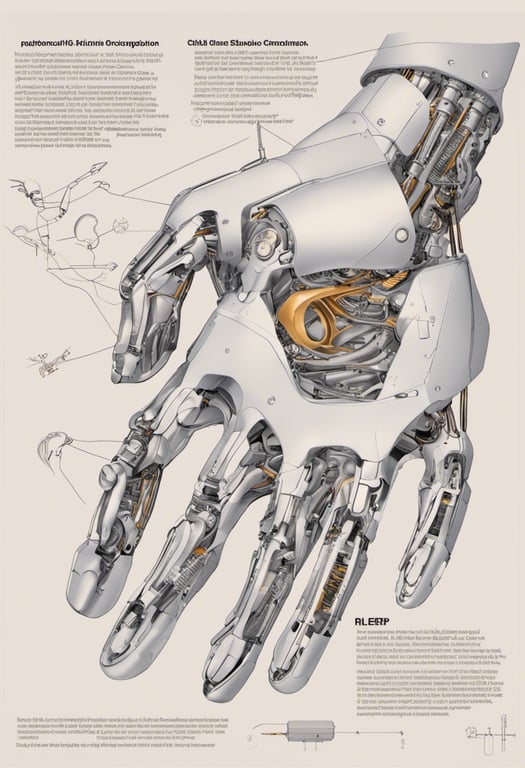

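A multimodal tactile sensor that can switch between visual and tactile modes is used.

During demonstration collection, a novel force-matching method ensures that the robot reproduces the forces applied by the demonstrator.

The policy learns when to switch sensor modes, treating the mode switch as one of its action outputs.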

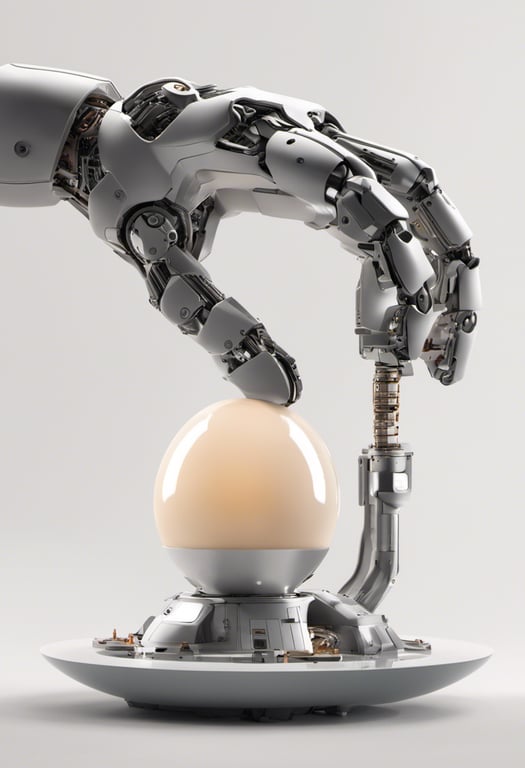

Experiments on real-robot tasks show the benefits of tactile sensing and force matching.
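The force-matching idea in the bullets above can be illustrated with a simple spring model. The sketch below is not the authors' algorithm; it assumes a Cartesian impedance controller with a hypothetical diagonal stiffness k per axis, so that the applied force is f = k (x_target - x). Under that assumption, commanding x_target = x + f / k at each demonstrated position x would reproduce the demonstrated force f. The function name `force_matched_targets` and all numbers are illustrative only.

```python
from typing import List, Sequence


def force_matched_targets(
    positions: Sequence[Sequence[float]],
    forces: Sequence[Sequence[float]],
    stiffness: Sequence[float],
) -> List[List[float]]:
    """Offset demonstrated positions so a spring-like controller reproduces demonstrated forces.

    Hypothetical per-axis model: f = k * (x_target - x), so x_target = x + f / k,
    where k is an assumed diagonal stiffness in N/m.
    """
    if len(positions) != len(forces):
        raise ValueError("positions and forces must have the same length")
    if any(k <= 0 for k in stiffness):
        raise ValueError("stiffness values must be positive")
    targets: List[List[float]] = []
    for x, f in zip(positions, forces):
        # Shift the commanded target past the contact point by f / k on each axis.
        targets.append([xi + fi / ki for xi, fi, ki in zip(x, f, stiffness)])
    return targets


if __name__ == "__main__":
    # One demonstrated contact: 10 N along y, with an assumed stiffness of 1000 N/m on that axis.
    demo_positions = [[0.5, 0.0, 0.3]]
    demo_forces = [[0.0, 10.0, 0.0]]
    print(force_matched_targets(demo_positions, demo_forces, [1000.0, 1000.0, 500.0]))
    # prints [[0.5, 0.01, 0.3]]
```

A stiffer controller needs a smaller offset to produce the same force, which is why the offset scales with 1 / k under this assumed model.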

AI generated summary

This paper investigates the use of a multimodal tactile sensor for imitation learning of contact-rich manipulation skills on a real robot. The sensor has visual and tactile modes, and the authors present methods for matching the demonstrator's forces during data collection and for learning when to switch between sensor modes. Experiments on opening and closing cabinet doors show performance benefits from tactile sensing, force matching, and learned mode switching.
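Learning when to switch sensor modes, as described in the summary, amounts to adding a discrete output to the policy. The sketch below assumes, hypothetically, that the last element of the raw policy output is a logit for the sensor mode, thresholded at 0.5 after a sigmoid, and trained with binary cross-entropy against the mode recorded in the demonstration. The names `split_policy_output`, `SplitAction`, and `mode_loss` are illustrative and are not taken from the paper.

```python
import math
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class SplitAction:
    motion: Tuple[float, ...]  # continuous motion command (e.g. an end-effector delta)
    tactile_mode: bool         # True -> tactile mode, False -> visual mode


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def split_policy_output(raw: Sequence[float], threshold: float = 0.5) -> SplitAction:
    """Treat the last policy output as a logit for the (hypothetical) sensor-mode action."""
    if len(raw) < 2:
        raise ValueError("expected at least one motion dimension plus a mode logit")
    *motion, mode_logit = raw
    return SplitAction(tuple(motion), sigmoid(mode_logit) >= threshold)


def mode_loss(mode_logit: float, demo_tactile: bool) -> float:
    """Binary cross-entropy between the predicted mode and the demonstrated mode."""
    p = min(max(sigmoid(mode_logit), 1e-12), 1.0 - 1e-12)
    return -math.log(p) if demo_tactile else -math.log(1.0 - p)


if __name__ == "__main__":
    action = split_policy_output([0.01, 0.0, -0.02, 2.0])
    print(action.motion, action.tactile_mode)
```

Keeping the mode as a separate classification head lets the same policy network emit motion and sensor-mode decisions in one step; a sequence model or a different threshold would be equally plausible design choices.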

You might also like

Vision-based tactile sensing for multimodal contact information

Cutaneous feedback for dexterous teleoperation

Using vision and touch sensing to assess robotic grasp stability

Teleoperation with haptic feedback using low-cost tactile sensors

Predicting touch from vision for robot manipulation

Estimating touch from 3D scenes
