Monocular depth estimation challenge tests generalization

Paper Title:

The Third Monocular Depth Estimation Challenge

Published on:

25 April 2024

Primary Category:

Computer Vision and Pattern Recognition

Paper Authors:

Jaime Spencer, Fabio Tosi, Matteo Poggi, Ripudaman Singh Arora, Chris Russell, Simon Hadfield, Richard Bowden, GuangYuan Zhou, ZhengXin Li, Qiang Rao, YiPing Bao, Xiao Liu, Dohyeong Kim, Jinseong Kim, Myunghyun Kim, Mykola Lavreniuk, Rui Li, Qing Mao, Jiang Wu, Yu Zhu, Jinqiu Sun, Yanning Zhang, Suraj Patni, Aradhye Agarwal, Chetan Arora, Pihai Sun, Kui Jiang, Gang Wu, Jian Liu, Xianming Liu, Junjun Jiang, Xidan Zhang, Jianing Wei, Fangjun Wang, Zhiming Tan, Jiabao Wang, Albert Luginov, Muhammad Shahzad, Seyed Hosseini, Aleksander Trajcevski, James H. Elder

Key Details

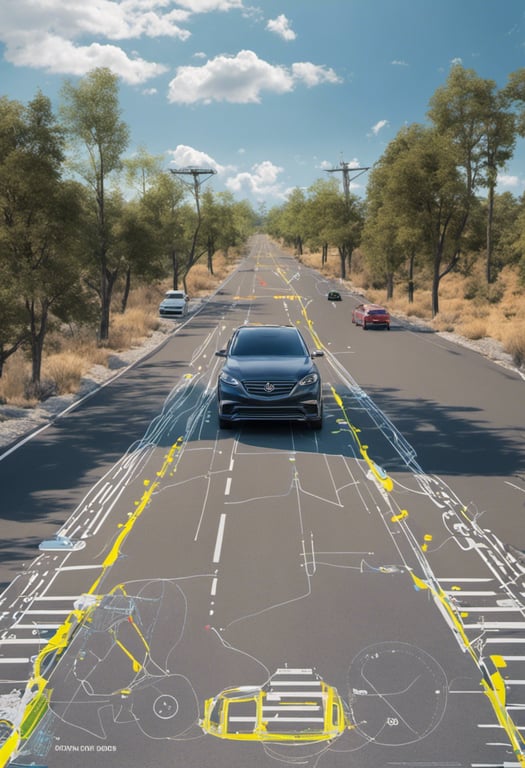

Challenge tests depth estimation generalization

19 submissions beat baseline performance

Most use the Depth Anything model

Winning method achieves a 23.72% 3D F-Score

AI generated summary

The paper summarizes the third Monocular Depth Estimation Challenge, which tested how well algorithms generalize to complex natural and indoor scenes. Nineteen submissions outperformed the baseline method. Ten teams submitted reports describing their approaches, and these reports show widespread use of the Depth Anything model. The top method raised the 3D F-Score from 17.51% to 23.72%, an absolute gain of 6.21 percentage points.
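
The summary reports a 3D F-Score without defining it. As a rough illustration only, the sketch below assumes the common point-cloud formulation: precision is the fraction of predicted points lying within a distance threshold of some ground-truth point, recall is the same measured from ground truth to prediction, and the F-Score is their harmonic mean. The function name `fscore_3d`, the threshold of 0.1, and the toy points are all hypothetical; the challenge's actual evaluation protocol may differ.

```python
import math

def fscore_3d(pred, gt, threshold):
    """Point-cloud F-score for two lists of (x, y, z) tuples (assumed formulation)."""
    def frac_within(src, dst):
        # Fraction of src points whose nearest dst point is within the threshold.
        if not src or not dst:
            return 0.0
        hits = sum(
            1 for p in src
            if min(math.dist(p, q) for q in dst) <= threshold
        )
        return hits / len(src)

    precision = frac_within(pred, gt)  # how much of the prediction is accurate
    recall = frac_within(gt, pred)     # how much of the ground truth is covered
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Toy data (hypothetical): two predicted points are close, two are far off in depth.
gt = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (0.0, 1.0, 1.0), (1.0, 1.0, 1.0)]
pred = [(0.0, 0.0, 1.05), (1.0, 0.0, 1.02), (0.0, 1.0, 1.5), (1.0, 1.0, 2.0)]
score = fscore_3d(pred, gt, threshold=0.1)
print(f"F-score: {score:.2f}")
```

Because the score depends on 3D point positions rather than per-pixel depth error alone, a prediction with large depth errors on only part of the scene loses both precision and recall. The brute-force nearest-neighbour search here is quadratic and meant for readability, not for full-resolution point clouds.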
