Generalized depth inference from RGB and sparse depth

Paper Title:

G2-MonoDepth: A General Framework of Generalized Depth Inference from Monocular RGB+X Data

Published on:

24 October 2023

Primary Category:

Computer Vision and Pattern Recognition

Paper Authors:

Haotian Wang,

Meng Yang,

Nanning Zheng


Key Details

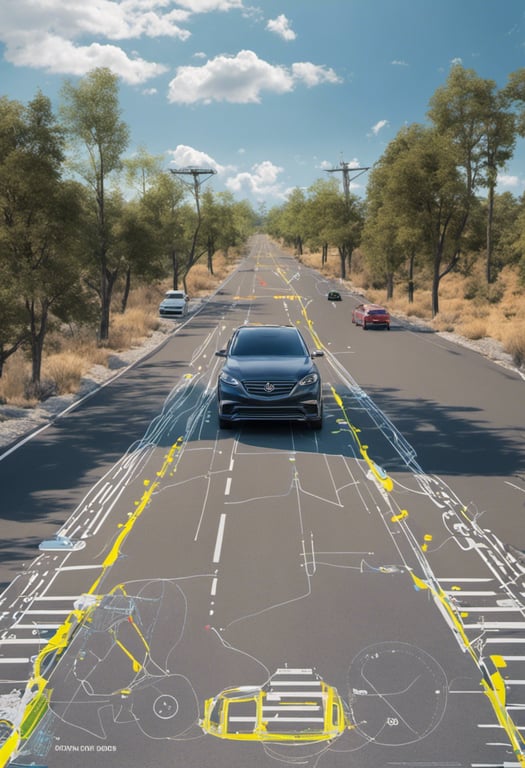

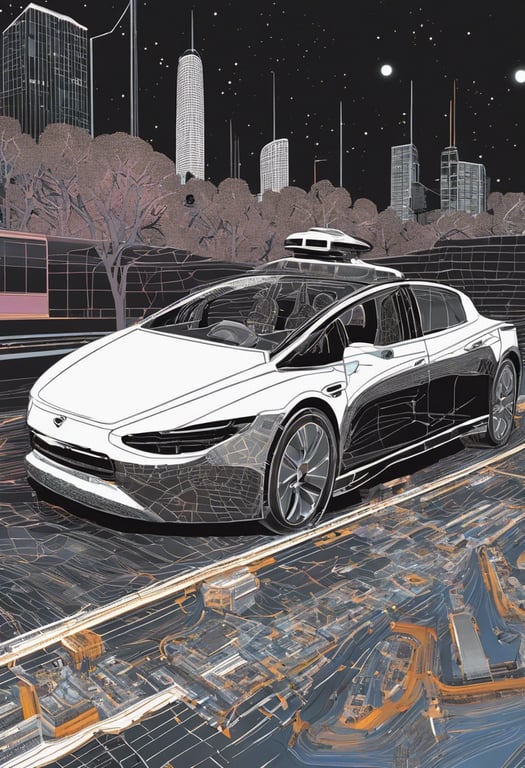

Investigates a unified task: inferring depth from RGB images plus optional raw depth (absent, sparse, or dense) in diverse scenes

Develops a benchmark, G2-MonoDepth, with a unified data representation, loss, network, and data pipeline

A unified loss adapts to the sparsity and errors of the input data and to the scale of the output scene

ReZero U-Net propagates scene scales from input to output

Outperforms state-of-the-art baselines in depth estimation, depth completion, and depth enhancement
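The ReZero U-Net bullet refers to ReZero, a published residual rule of the form y = x + α·F(x) in which the learnable scalar α is initialised to zero. The summary does not say where G2-MonoDepth places these connections inside its U-Net, so the sketch below shows only the generic ReZero rule on plain Python lists. `ReZeroBlock` and `toy_layer` are hypothetical names, and `toy_layer` stands in for the block's learned convolutions. At initialisation every block is an identity map, so input values, including the absolute scale carried by raw depth, pass through unchanged; reading this as the reason the paper uses ReZero is a plausible interpretation, not something the summary states.

```python
from typing import Callable, List

Vector = List[float]


class ReZeroBlock:
    """Generic ReZero residual rule: y = x + alpha * f(x), alpha initialised to 0."""

    def __init__(self, f: Callable[[Vector], Vector], alpha: float = 0.0) -> None:
        self.f = f          # inner sub-network (hypothetical stand-in here)
        self.alpha = alpha  # learnable scalar gate; zero at initialisation

    def __call__(self, x: Vector) -> Vector:
        fx = self.f(x)
        return [xi + self.alpha * fi for xi, fi in zip(x, fx)]


def toy_layer(x: Vector) -> Vector:
    # Hypothetical stand-in for learned convolutions: fixed affine map + ReLU.
    return [max(0.0, 0.5 * v - 1.0) for v in x]


depth = [2.0, 10.0, 50.0]      # illustrative raw depth values in metres
block = ReZeroBlock(toy_layer)
print(block(depth))            # → [2.0, 10.0, 50.0] (identity at init)

block.alpha = 0.1              # once training moves alpha away from zero...
print(block(depth))            # ...the residual branch starts to contribute
```

Because α starts at zero, the network begins as a pure pass-through and only gradually learns how much each residual branch should modify the signal.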


AI generated summary


This paper investigates a unified task of monocular depth inference: producing high-quality depth maps from RGB images plus optional raw depth maps that vary in scene scale, semantics, and depth sparsity/errors. It develops a benchmark, G2-MonoDepth, with four components: (1) an RGB+X data representation that accommodates diverse input data; (2) a novel unified loss that adapts to the input data and the output scenes; (3) an improved network, ReZero U-Net, that propagates scene scales; and (4) a data augmentation pipeline. Experiments show that G2-MonoDepth outperforms state-of-the-art baselines in depth estimation, depth completion, and depth enhancement.
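The idea of one RGB+X input covering all three sub-tasks, together with an augmentation step that fabricates imperfect raw depth, can be illustrated with a toy sketch. It assumes missing depth is encoded as 0.0 and that a binary validity mask is derived from it; the paper's actual channel layout and artifact models (for example blurs) are not reproduced. `make_rgbx` and `simulate_raw_depth` are hypothetical helpers that apply only random dropout, one rectangular hole, and Gaussian noise.

```python
import random
from typing import List, Optional, Tuple

Grid = List[List[float]]
RGB = Tuple[float, float, float]


def make_rgbx(rgb: List[List[RGB]], raw_depth: Grid) -> List[List[Tuple[float, ...]]]:
    """Stack each pixel as (r, g, b, x, m): RGB, raw depth X, validity mask M.

    Assumption: missing depth is stored as 0.0, so M = 1 exactly where x > 0.
    """
    out = []
    for rgb_row, d_row in zip(rgb, raw_depth):
        out.append([(*c, d, 1.0 if d > 0 else 0.0) for c, d in zip(rgb_row, d_row)])
    return out


def simulate_raw_depth(gt: Grid, keep_ratio: float, noise_std: float = 0.0,
                       hole: Optional[Tuple[int, int, int, int]] = None,
                       seed: int = 0) -> Grid:
    """Hypothetical augmentation: random sparsification, one hole, Gaussian noise.

    hole = (row0, col0, row1, col1), half-open; pixels inside it are dropped.
    """
    rng = random.Random(seed)
    raw = []
    for i, row in enumerate(gt):
        new_row = []
        for j, d in enumerate(row):
            in_hole = hole is not None and hole[0] <= i < hole[2] and hole[1] <= j < hole[3]
            if in_hole or rng.random() >= keep_ratio:
                new_row.append(0.0)  # missing measurement
            else:
                new_row.append(max(0.0, d + rng.gauss(0.0, noise_std)))
        raw.append(new_row)
    return raw


gt = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]          # toy ground-truth depth
rgb = [[(0.5, 0.5, 0.5)] * 3 for _ in range(2)]  # toy grey image

# Depth estimation: X is empty.
estimation = make_rgbx(rgb, simulate_raw_depth(gt, keep_ratio=0.0))
# Depth completion: X is sparse.
completion = make_rgbx(rgb, simulate_raw_depth(gt, keep_ratio=0.3, seed=1))
# Depth enhancement: X is dense but noisy and has a hole.
enhancement = make_rgbx(rgb, simulate_raw_depth(gt, keep_ratio=1.0, noise_std=0.05,
                                                hole=(0, 0, 1, 2)))
```

Only the X and M channels differ between the three cases, which is what lets a single network be trained on a mixture of them.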
