Demystifying Monocular Depth Estimation with Synthetic Data

Published on: 2 May 2023

Primary Category: Computer Vision and Pattern Recognition

Paper Authors: Aakash Rajpal, Noshaba Cheema, Klaus Illgner-Fehns, Philipp Slusallek, Sunil Jaiswal

Key Details

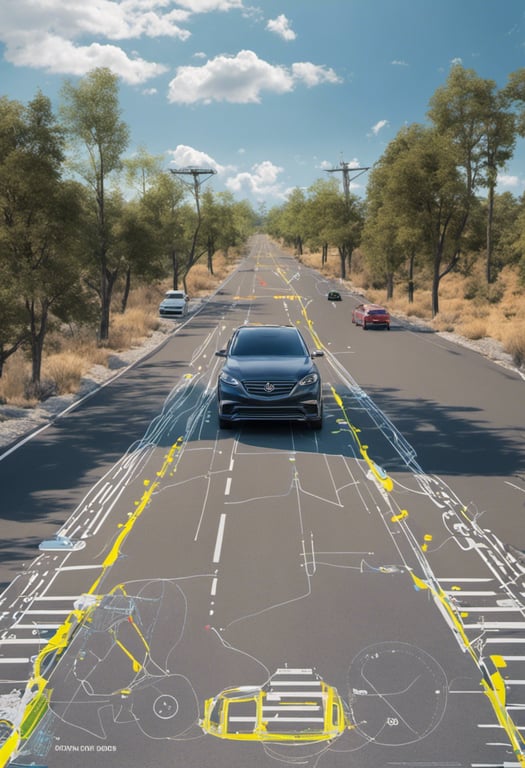

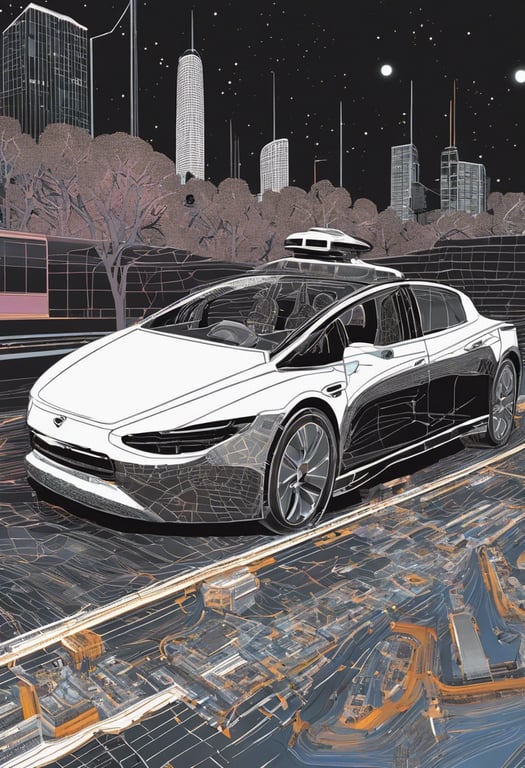

Generated 100K high-resolution (1920x1080) synthetic RGB-D pairs from the game Grand Theft Auto V

Retraining depth estimation models such as DPT on the new dataset significantly boosts performance

An added feature extraction module and an attention loss enable high-resolution depth prediction

Achieves state-of-the-art results on public datasets such as NYU and KITTI after retraining

Proposed model outputs smooth, consistent depth maps on diverse scenes

AI generated summary

This paper introduces a new high-resolution synthetic dataset for training monocular depth estimation models. The dataset contains 100K RGB-D pairs at 1920x1080 resolution captured in Grand Theft Auto V, providing diverse indoor and outdoor scenes with precise depth maps. The authors demonstrate that retraining existing models such as DPT on this data improves performance and generalizability. Key innovations include a feature extraction module to handle high-resolution images and an attention-based loss function.
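The summary does not say which metrics sit behind "improves performance". As a minimal sketch, assuming the error measures commonly reported on NYU and KITTI (absolute relative error, RMSE, and the δ < 1.25 accuracy), a comparison between predicted and ground-truth depth could be computed as follows. The function name `depth_metrics` and the `min_depth` threshold are hypothetical and are not taken from the paper.

```python
import math


def depth_metrics(pred, gt, min_depth=1e-3):
    """Compute AbsRel, RMSE, and delta < 1.25 accuracy over valid pixels.

    pred, gt: flat sequences of depths in the same units (e.g. metres).
    A pixel is skipped when its ground truth is <= min_depth or its
    prediction is <= 0 (assumed here to mean "no valid depth").
    """
    pairs = [(p, g) for p, g in zip(pred, gt) if g > min_depth and p > 0]
    if not pairs:
        raise ValueError("no valid pixels to evaluate")
    n = len(pairs)

    # Mean absolute error relative to the true depth.
    abs_rel = sum(abs(p - g) / g for p, g in pairs) / n
    # Root mean squared error in depth units.
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in pairs) / n)
    # Fraction of pixels whose prediction is within a factor of 1.25 of truth.
    delta1 = sum(1 for p, g in pairs if max(p / g, g / p) < 1.25) / n

    return {"abs_rel": abs_rel, "rmse": rmse, "delta1": delta1}


if __name__ == "__main__":
    # Toy values for illustration only; the last pixel has no ground truth.
    predicted = [1.0, 2.2, 4.0, 0.5]
    ground_truth = [1.0, 2.0, 3.0, 0.0]
    print(depth_metrics(predicted, ground_truth))
```

Lower `abs_rel` and `rmse` and higher `delta1` indicate better predictions, so a retrained model would be expected to move all three in those directions on a held-out benchmark.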
