Universal LiDAR segmentation

Published on:

2 May 2024

Primary Category:

Computer Vision and Pattern Recognition

Paper Authors:

Youquan Liu, Lingdong Kong, Xiaoyang Wu, Runnan Chen, Xin Li, Liang Pan, Ziwei Liu, Yuexin Ma

Key Details

Combines multi-task, multi-dataset, and multi-modality data

Conducts alignments in data, feature, and label spaces

Achieves state-of-the-art segmentation performance on SemanticKITTI, nuScenes, and Waymo

Uses a single set of parameters across diverse datasets

Demonstrates strong robustness and generalizability

AI-generated summary

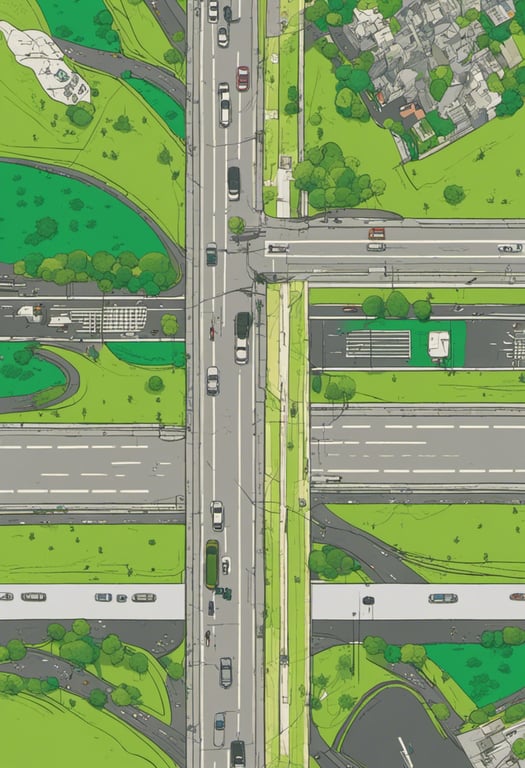

This paper presents M3Net, a framework for multi-task, multi-dataset, multi-modality LiDAR segmentation that uses a single set of parameters. It combines large-scale driving datasets acquired with different sensors and aligns them in the data, feature, and label spaces during training, which lets it train state-of-the-art segmentation models on heterogeneous data. In experiments on 12 datasets, M3Net achieves top performance on SemanticKITTI, nuScenes, and Waymo with one shared parameter set.
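To make the idea of label-space alignment more concrete, the following is a minimal sketch of one simple way to map dataset-specific label ids into a shared label space so that a single model head can be trained on several datasets. It is not M3Net's actual method, which the summary does not describe in detail. The dataset names, class lists, label ids, the synonym table, and the helpers `build_unified_space` and `to_unified` are all hypothetical and chosen only for illustration.

```python
import numpy as np

# Hypothetical per-dataset class lists. The position of a name in the list
# is its dataset-specific label id. These are toy subsets, not real label maps.
DATASET_CLASSES = {
    "dataset_a": ["car", "person", "road"],
    "dataset_b": ["road", "car", "pedestrian", "barrier"],
}

# Assumed synonyms: different names that should share one unified class.
SYNONYMS = {"pedestrian": "person"}


def build_unified_space(dataset_classes, synonyms):
    """Return the unified class list and one lookup table per dataset."""
    canonical = lambda name: synonyms.get(name, name)
    unified = sorted(
        {canonical(n) for names in dataset_classes.values() for n in names}
    )
    index = {name: i for i, name in enumerate(unified)}
    # luts[dataset][local_id] gives the unified id for that local label id.
    luts = {
        ds: np.array([index[canonical(n)] for n in names], dtype=np.int64)
        for ds, names in dataset_classes.items()
    }
    return unified, luts


def to_unified(labels, lut):
    """Map an array of dataset-specific label ids to unified ids."""
    return lut[labels]


UNIFIED, LUTS = build_unified_space(DATASET_CLASSES, SYNONYMS)

# Per-point labels from each dataset, expressed in that dataset's own ids.
labels_a = np.array([0, 1, 2, 1])  # car, person, road, person
labels_b = np.array([2, 0, 3])     # pedestrian, road, barrier

unified_a = to_unified(labels_a, LUTS["dataset_a"])
unified_b = to_unified(labels_b, LUTS["dataset_b"])
```

With a mapping like this, "person" in one dataset and "pedestrian" in the other receive the same unified id, so points from both datasets can supervise the same output channel. A table of this kind only handles classes that correspond one-to-one; classes with different granularity across datasets would need additional handling.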
