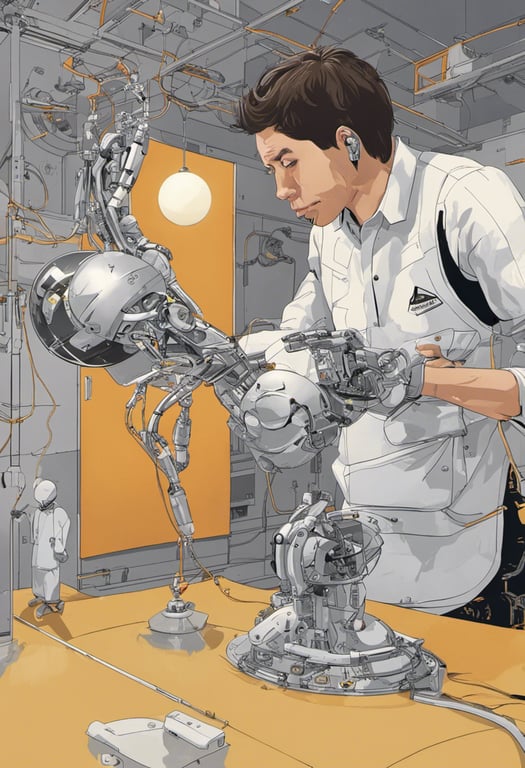

Predicting object motion from videos enables diverse robot manipulation

Paper Title: Track2Act: Predicting Point Tracks from Internet Videos enables Diverse Zero-shot Robot Manipulation

Published on: 2 May 2024

Primary Category: Robotics

Paper Authors: Homanga Bharadhwaj, Roozbeh Mottaghi, Abhinav Gupta, Shubham Tulsiani

Key Details

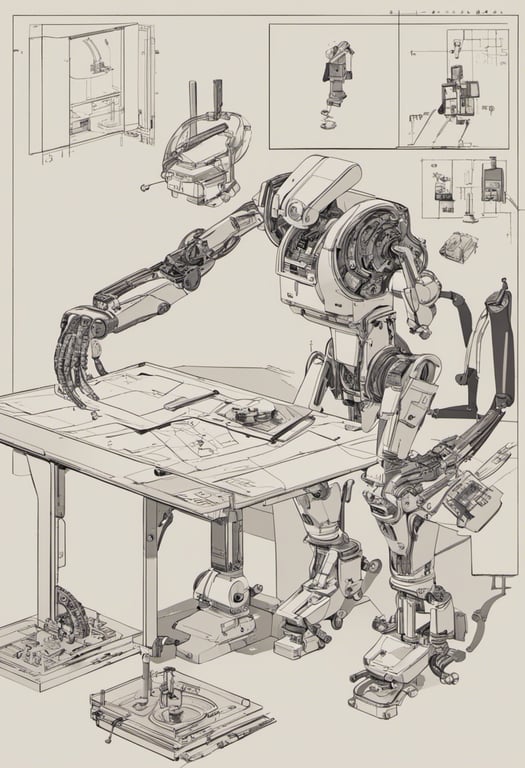

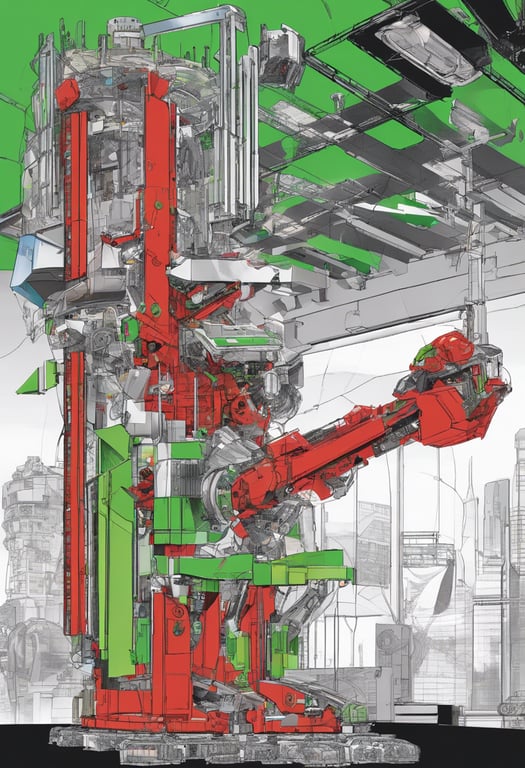

Proposes a track prediction model, trained on web videos, that forecasts object motion

Converts predicted 2D tracks to 3D robot manipulation plans

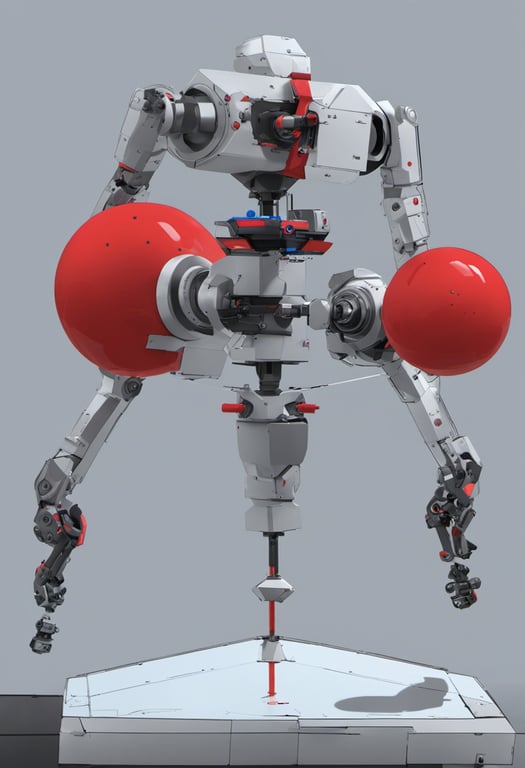

Combines scalable track prediction with a small robot-specific residual policy

Enables zero-shot robot manipulation in unseen scenarios

Shows real-world robot results across diverse tasks

AI generated summary

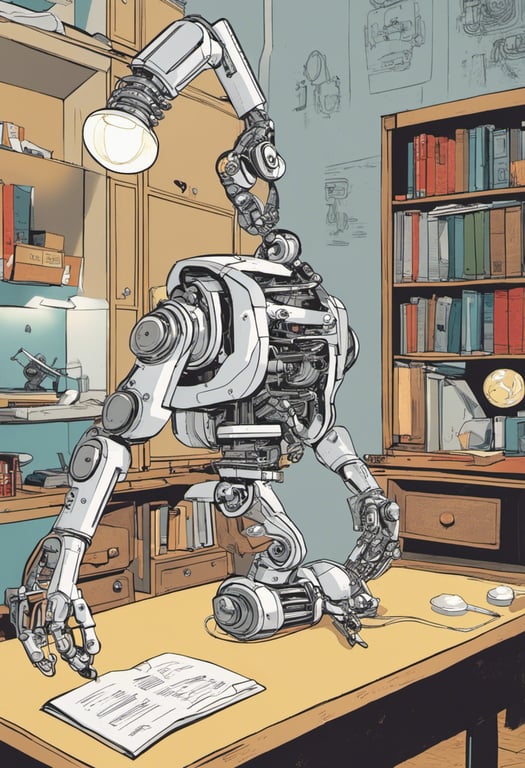

This paper proposes a model, trained on web videos, that predicts how points on objects should move between an initial and a goal scene configuration. These predicted point 'tracks' are then converted into robot manipulation plans that work in new scenarios not seen during training. A small amount of robot-specific data is used to train a residual policy that refines the open-loop plans into closed-loop policies.
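To make the step from point tracks to a motion plan more concrete, here is a minimal sketch. It is not the paper's implementation. It assumes, purely for illustration, that each tracked point has a depth value at both the start and the end of the motion, lifts the points to 3D with a pinhole camera model, and fits one rigid transform with the Kabsch algorithm. The function names `backproject` and `fit_rigid_transform` and the intrinsics `fx, fy, cx, cy` are hypothetical.

```python
import numpy as np


def backproject(pixels, depths, fx, fy, cx, cy):
    """Lift (N, 2) pixel coordinates with (N,) depths to (N, 3) camera-frame points.

    Assumes an ideal pinhole camera with focal lengths (fx, fy) and
    principal point (cx, cy); these intrinsics are illustrative inputs.
    """
    u, v = pixels[:, 0], pixels[:, 1]
    x = (u - cx) * depths / fx
    y = (v - cy) * depths / fy
    return np.stack([x, y, depths], axis=1)


def fit_rigid_transform(src, dst):
    """Least-squares rotation R and translation t such that dst ~ src @ R.T + t.

    Uses the Kabsch algorithm on two (N, 3) arrays of corresponding points.
    """
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    # Cross-covariance of the centered point sets.
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation (det = +1).
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t
```

Under these assumptions, calling `backproject` on the track positions at the first and last time steps and passing both results to `fit_rigid_transform` gives a single object-level motion that a planner could turn into an end-effector target.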
