Benchmark for long context language model understanding

Paper Title:

XL$^2$Bench: A Benchmark for Extremely Long Context Understanding with Long-range Dependencies

Published on:

8 April 2024

Primary Category:

Computation and Language

Paper Authors:

Xuanfan Ni, Hengyi Cai, Xiaochi Wei, Shuaiqiang Wang, Dawei Yin, Piji Li

Key Details

XL2Bench tests language models on very long texts: 100K+ words in English and 200K+ characters in Chinese

It has 27 subtasks across 3 scenarios and 4 tasks

Leading LLMs struggle with XL2Bench, lagging behind human performance

Data augmentation helps reduce benchmark contamination

Models still face challenges in comprehensively understanding long texts

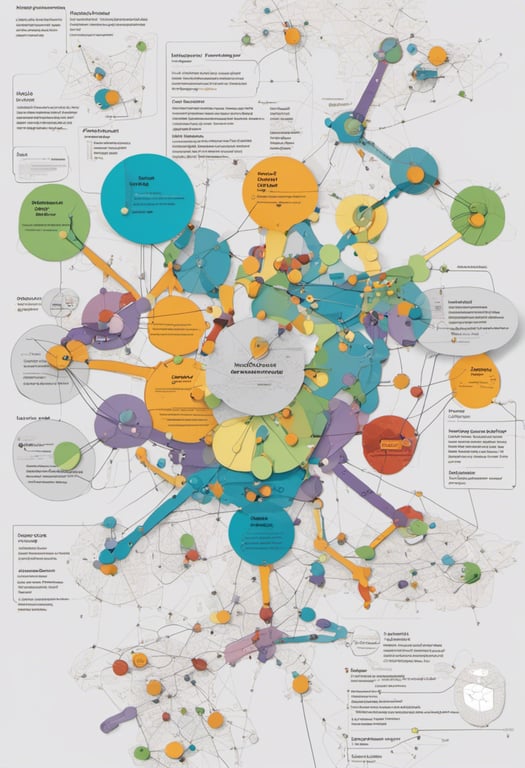

AI generated summary

This paper introduces XL2Bench, a benchmark for evaluating how well language models understand very long texts (100K+ words in English, 200K+ characters in Chinese). It covers 3 scenarios (fiction, papers, laws) and 4 tasks (memory retrieval, detailed understanding, overall understanding, open-ended generation), for a total of 27 subtasks. Experiments on 6 leading LLMs show that their performance lags far behind human performance, indicating that current models still struggle with very long texts. The benchmark also uses data augmentation to mitigate data contamination.
