Decoder-decoder architecture for efficient language models

Published on: 8 May 2024

Primary Category: Computation and Language

Paper Authors: Yutao Sun, Li Dong, Yi Zhu, Shaohan Huang, Wenhui Wang, Shuming Ma, Quanlu Zhang, Jianyong Wang, Furu Wei

Key Details

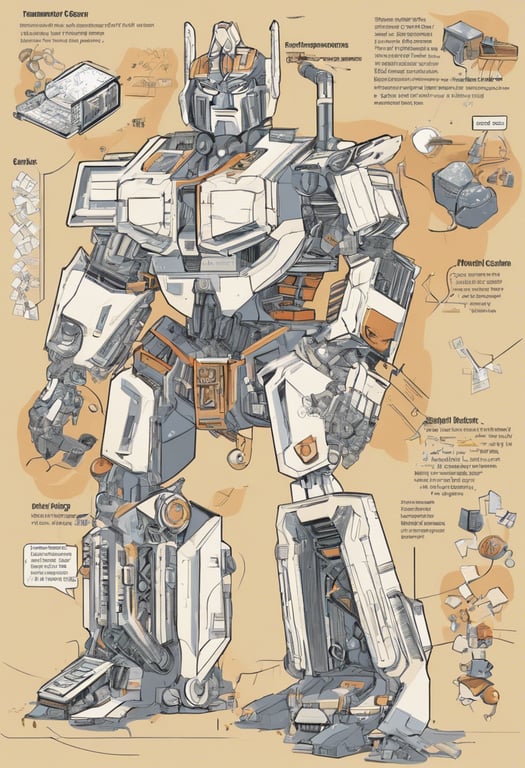

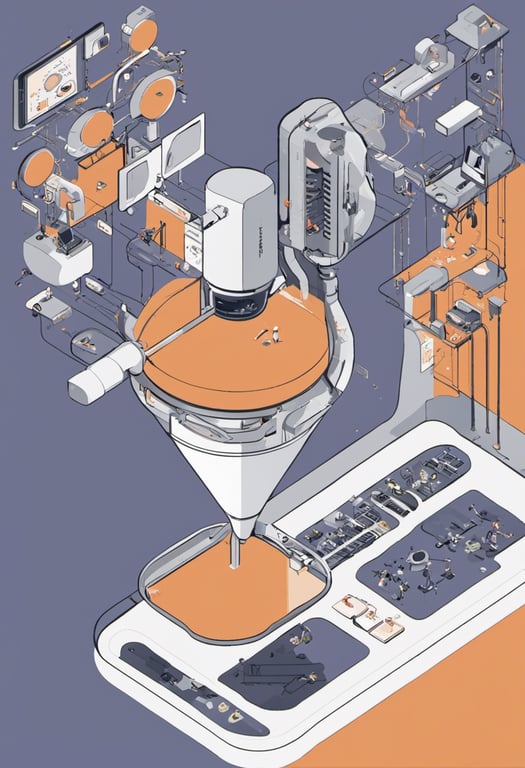

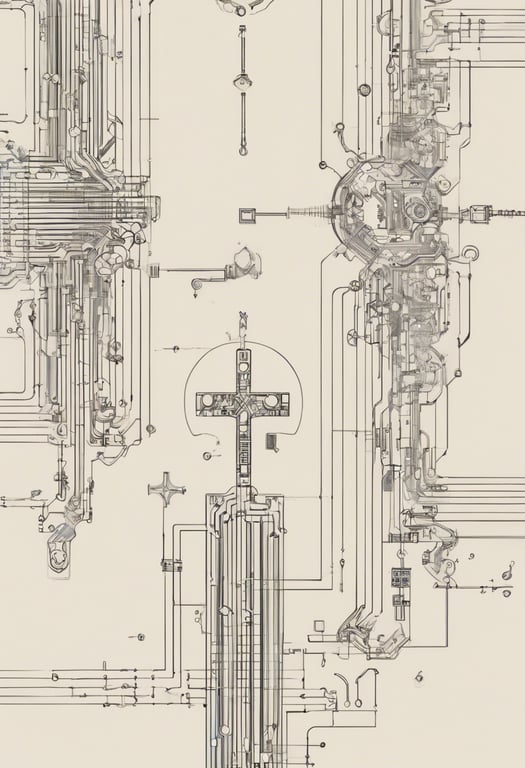

Proposes YOCO, a decoder-decoder architecture for efficient language models

Self-decoder encodes global key-value caches, cross-decoder reuses them

Reduces GPU memory for inference and speeds up prefilling stage

Scales well with respect to model size, training data, and context length (up to 1M tokens)

Achieves high accuracy on retrieval tasks at a 1M-token context length
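To make the memory point concrete, here is a rough back-of-the-envelope sketch in Python. It is not taken from the paper: the function names, the even split of layers between the two decoders, and the `local_window` parameter (a bounded cache for the self-decoder layers) are all assumptions made for illustration.

```python
def kv_cache_elements_standard(num_layers: int, seq_len: int, hidden_dim: int) -> int:
    """Cache entries when every layer stores its own keys and values."""
    # One key tensor and one value tensor of shape (seq_len, hidden_dim) per layer.
    return 2 * num_layers * seq_len * hidden_dim


def kv_cache_elements_shared(
    num_layers: int, seq_len: int, hidden_dim: int, local_window: int
) -> int:
    """Cache entries when one global KV cache is shared by the cross-decoder.

    ASSUMPTION (not from the summary): half of the layers form the self-decoder
    and each keeps only a bounded cache of at most `local_window` positions.
    """
    self_layers = num_layers // 2
    bounded = 2 * self_layers * min(local_window, seq_len) * hidden_dim
    shared = 2 * seq_len * hidden_dim  # stored once, reused by every cross-decoder layer
    return bounded + shared


if __name__ == "__main__":
    # Hypothetical model shape, chosen only to show how the two counts diverge.
    standard = kv_cache_elements_standard(num_layers=32, seq_len=1_000_000, hidden_dim=4096)
    shared = kv_cache_elements_shared(
        num_layers=32, seq_len=1_000_000, hidden_dim=4096, local_window=1024
    )
    print(f"per-layer caches: {standard:,} elements")
    print(f"shared cache:     {shared:,} elements")
```

Under these assumptions, the per-layer count grows with the product of layer count and sequence length, while the shared count grows with the sequence length only once plus a bounded term per self-decoder layer.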

AI generated summary

The paper proposes YOCO, a decoder-decoder architecture for large language models. It consists of a self-decoder that encodes global key-value caches and a cross-decoder that reuses those caches. Compared with a regular Transformer decoder, this design reduces GPU memory usage during inference and speeds up the prefilling stage. Experiments show that YOCO scales well in terms of model size, training data, and context length. At a context length of 1 million tokens, it achieves high accuracy on retrieval tasks. Profiling shows memory usage reduced by orders of magnitude, along with faster prefilling.
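The data flow described above can be sketched in a few lines of NumPy. This is a toy illustration, not the authors' implementation: `decoder_decoder_forward`, the weight layout, and the single-head attention are hypothetical, and the self-decoder here uses plain causal attention as a stand-in because the summary does not say which attention variant it uses. The point is only that the global keys and values are computed once and then reused by every cross-decoder layer.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def causal_attention(q, k, v):
    """Single-head attention where position i only sees positions <= i."""
    n, d = q.shape
    scores = q @ k.T / np.sqrt(d)
    future = np.triu(np.ones((n, k.shape[0]), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    return softmax(scores) @ v


def decoder_decoder_forward(x, self_layers, cross_layers, w_k, w_v):
    """Toy forward pass: self-decoder, one global KV cache, cross-decoder.

    self_layers:  list of (w_q, w_k, w_v) tuples, one per self-decoder layer.
    cross_layers: list of w_q matrices; these layers hold no K/V weights because
                  they all read the same shared cache (illustrative assumption).
    """
    h = x
    for w_q, w_k_l, w_v_l in self_layers:
        h = h + causal_attention(h @ w_q, h @ w_k_l, h @ w_v_l)

    # The global key-value cache is produced once from the self-decoder output.
    k_cache, v_cache = h @ w_k, h @ w_v

    for w_q in cross_layers:
        # Every cross-decoder layer attends to the same cached keys and values.
        h = h + causal_attention(h @ w_q, k_cache, v_cache)
    return h, (k_cache, v_cache)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, d = 6, 8
    x = rng.normal(size=(n, d))
    self_layers = [tuple(0.1 * rng.normal(size=(d, d)) for _ in range(3)) for _ in range(2)]
    cross_layers = [0.1 * rng.normal(size=(d, d)) for _ in range(2)]
    w_k, w_v = 0.1 * rng.normal(size=(d, d)), 0.1 * rng.normal(size=(d, d))
    out, (k_cache, v_cache) = decoder_decoder_forward(x, self_layers, cross_layers, w_k, w_v)
    print(out.shape, k_cache.shape, v_cache.shape)
```

In this sketch the number of cached key-value tensors stays at one pair no matter how many cross-decoder layers are added, which is the property the summary credits for the memory savings.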
