Visual language models with deep vision-text fusion

Published on: 6 November 2023

Primary Category: Computer Vision and Pattern Recognition

Paper Authors: Weihan Wang, Qingsong Lv, Wenmeng Yu, Wenyi Hong, Ji Qi, Yan Wang, Junhui Ji, Zhuoyi Yang, Lei Zhao, Xixuan Song, Jiazheng Xu, Bin Xu, Juanzi Li, Yuxiao Dong, Ming Ding, Jie Tang

Key Details

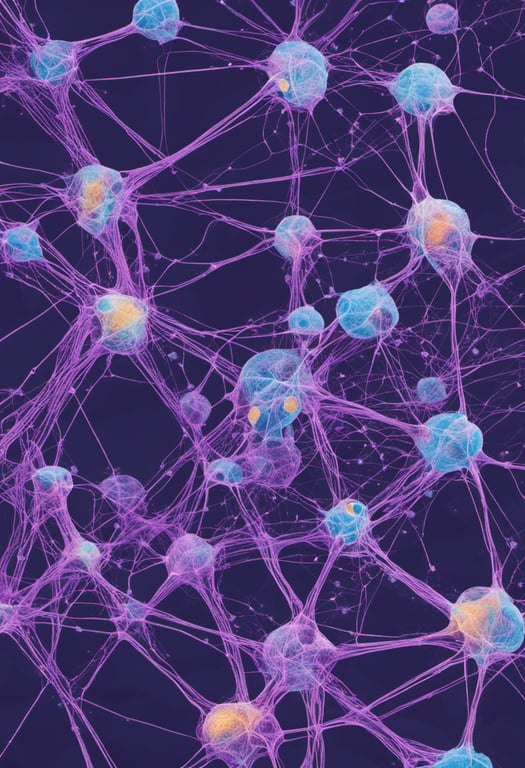

CogVLM adds trainable parameters in each layer of a pretrained language model to enable deep fusion with visual features

CogVLM achieves state-of-the-art results on 10 classic vision-language benchmarks

CogVLM retains its full text capabilities, unlike joint-training methods that degrade language performance

The model and training code are open-sourced to facilitate vision-language research

Experiments validate CogVLM's versatility across vision-language tasks such as image captioning, visual question answering (VQA), and visual grounding

AI generated summary

This paper introduces CogVLM, an open-source visual language model that achieves state-of-the-art performance through deep fusion of visual and textual features. Unlike shallow alignment methods, CogVLM adds trainable parameters to each layer of a pretrained language model rather than altering its existing weights. This lets the model align visual and textual semantics without sacrificing its language capabilities.
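The summary gives no implementation details, so the following is only a minimal sketch of the general idea of per-layer trainable parameters sitting alongside pretrained ones. It assumes that image tokens are projected with a new trainable matrix while text tokens keep using the pretrained matrix. The names `routed_projection`, `text_weight`, and `image_weight` are hypothetical and do not come from the CogVLM code.

```python
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def matvec(weight: Matrix, x: Sequence[float]) -> Vector:
    """Multiply a (rows x cols) matrix by a vector of length cols."""
    return [sum(w * v for w, v in zip(row, x)) for row in weight]


def routed_projection(
    tokens: Sequence[Sequence[float]],
    is_image: Sequence[bool],
    text_weight: Matrix,   # assumption: pretrained language-model weights, left untouched
    image_weight: Matrix,  # assumption: the extra trainable parameters for this layer
) -> List[Vector]:
    """Project each token with the weight matrix that matches its modality."""
    if len(tokens) != len(is_image):
        raise ValueError("tokens and is_image must have the same length")
    return [
        matvec(image_weight if image_flag else text_weight, token)
        for token, image_flag in zip(tokens, is_image)
    ]


# Toy example with 2-dimensional hidden states: one image token, then one text token.
text_weight = [[1.0, 0.0], [0.0, 1.0]]   # identity stands in for the pretrained weights
image_weight = [[2.0, 0.0], [0.0, 2.0]]  # a different matrix stands in for the new weights
tokens = [[1.0, 3.0], [1.0, 3.0]]
projected = routed_projection(tokens, [True, False], text_weight, image_weight)
```

In this sketch a text-only input never touches `image_weight`, so its output is identical to what the pretrained weights alone would produce. That is one way to read the claim that language capabilities are preserved.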
