Efficient GNN training on disk

Published on: 8 May 2024

Primary Category: Machine Learning

Paper Authors: Renjie Liu, Yichuan Wang, Xiao Yan, Zhenkun Cai, Minjie Wang, Haitian Jiang, Bo Tang, Jinyang Li

Key Details

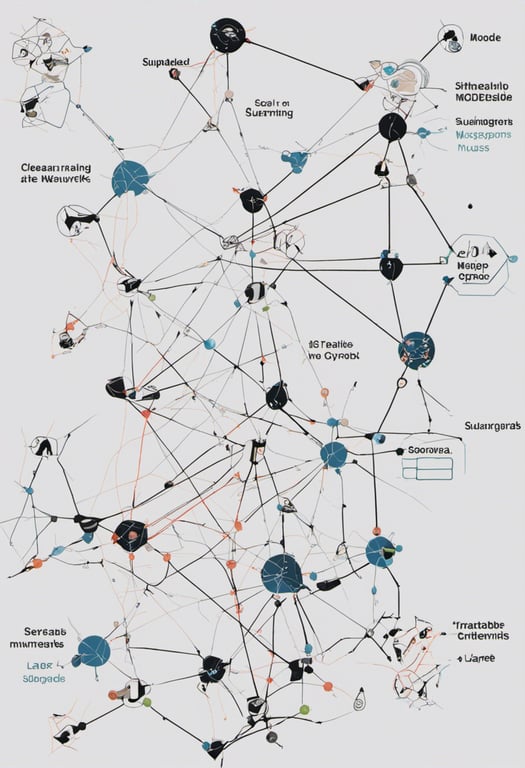

Decouples sampling & computation via offline sampling

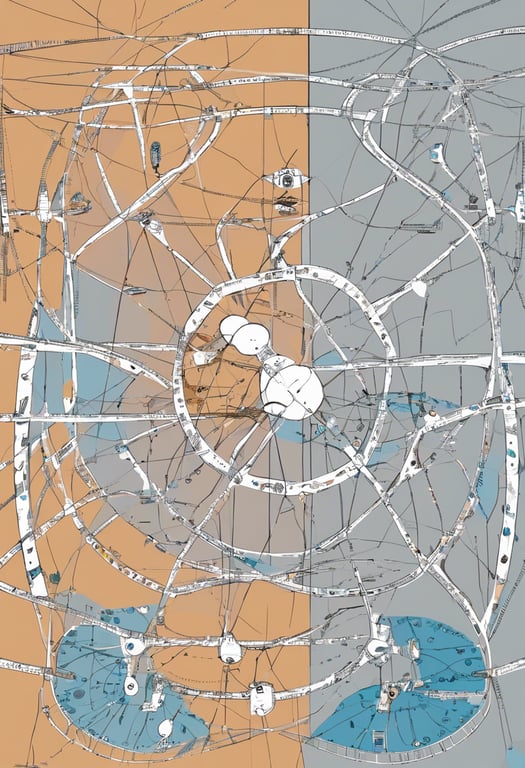

Optimizes data layout with a four-level caching hierarchy

Avoids disk read amplification via batched packing

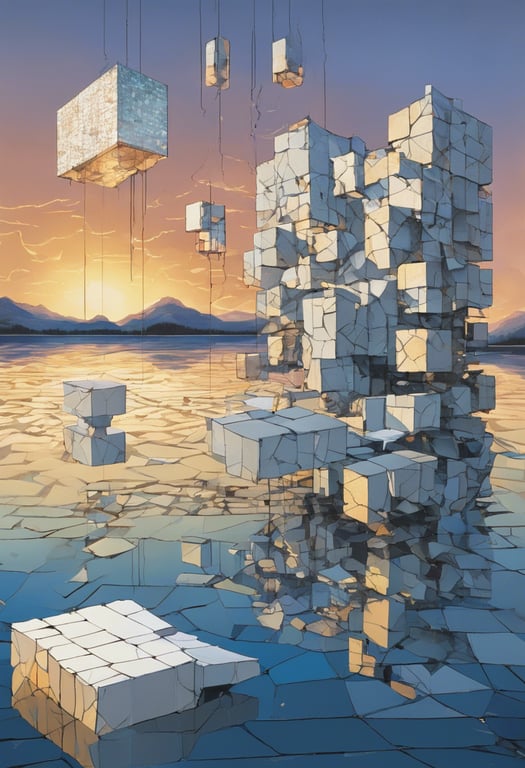

Overlaps disk I/O & computation with pipelining

Speeds up baselines by over 8x while matching their best model accuracy
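The idea behind offline sampling and packing in the bullets above can be sketched as follows. Because sampling runs before training, the node features each batch will touch are known in advance, so they can be laid out contiguously and fetched with one sequential read instead of many small random reads (small random reads at disk-block granularity are what cause read amplification). This is a minimal sketch under stated assumptions: a toy adjacency dict, one-hop neighbor sampling, and a Python list standing in for the on-disk file. The function names `offline_sample`, `pack_features`, and `load_batch` are hypothetical and are not DiskGNN's API.

```python
import random


def offline_sample(adj, seed_batches, fanout, rng):
    """Offline step (hypothetical): sample one hop of neighbors for every batch
    before training and record the node IDs whose features each batch needs."""
    plans = []
    for seeds in seed_batches:
        needed = set(seeds)
        for v in seeds:
            nbrs = adj.get(v, [])
            needed.update(rng.sample(nbrs, min(fanout, len(nbrs))))
        plans.append(sorted(needed))
    return plans


def pack_features(features, plans):
    """Copy each batch's features back-to-back into one buffer (a stand-in for
    the on-disk file) and return a (start, count) range per batch."""
    packed, ranges = [], []
    for ids in plans:
        ranges.append((len(packed), len(ids)))
        packed.extend(features[v] for v in ids)
    return packed, ranges


def load_batch(packed, ranges, b):
    """Training-time read: one contiguous slice per batch, no scattered lookups."""
    start, count = ranges[b]
    return packed[start:start + count]


# Toy usage: 4-node graph, 4-dimensional features, two batches of seed nodes.
rng = random.Random(0)
adj = {0: [1, 2, 3], 1: [0, 2], 2: [0, 1, 3], 3: [0, 2]}
features = {v: [float(v)] * 4 for v in adj}
plans = offline_sample(adj, [[0, 1], [2, 3]], fanout=2, rng=rng)
packed, ranges = pack_features(features, plans)
batch0 = load_batch(packed, ranges, 0)
```

In this sketch a node needed by several batches is copied once per batch, trading storage space for sequential access; the paper's actual layout and packing procedure may differ.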

AI generated summary

This paper introduces DiskGNN, a system for efficiently training graph neural networks on disk when graphs exceed CPU memory. DiskGNN achieves both high efficiency and high model accuracy through offline sampling (used to optimize the on-disk data layout), four-level caching, batched packing, and pipelined training. Experiments show that DiskGNN speeds up state-of-the-art systems by over 8x while matching their accuracy.
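Pipelined training can be sketched with a bounded producer/consumer queue: a background thread performs the (disk) loads while the main thread computes on batches that are already in memory, so I/O for upcoming batches overlaps with computation on the current one. This is a minimal, hypothetical sketch: the name `pipelined_train` and its parameters are illustrative rather than DiskGNN's API, and in a real system `load_fn` would read packed features from disk while `compute_fn` would run a training step.

```python
import queue
import threading


def pipelined_train(load_fn, compute_fn, num_batches, depth=2):
    """Hypothetical helper: run load_fn (I/O) in a background thread so that
    upcoming batches are read while compute_fn works on the current one."""
    q = queue.Queue(maxsize=depth)  # bounded: caps how far I/O runs ahead
    done = object()  # sentinel marking the end of the stream

    def loader():
        try:
            for b in range(num_batches):
                q.put(("ok", load_fn(b)))  # blocks while the queue is full
        except Exception as exc:
            q.put(("err", exc))  # forward loader errors to the consumer
            return
        q.put(("ok", done))

    worker = threading.Thread(target=loader, daemon=True)
    worker.start()
    results = []
    while True:
        tag, item = q.get()
        if tag == "err":
            worker.join()
            raise item
        if item is done:
            break
        results.append(compute_fn(item))
    worker.join()
    return results


# Toy usage: "loading" batch b yields a list; "computing" sums it.
out = pipelined_train(lambda b: [b] * 3, sum, num_batches=5)
```

The `depth` bound limits how many loaded batches wait in memory at once. Python threads are enough to get overlap here because blocking file reads release the GIL.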
