Reliability of Large Language Models for Factual Knowledge

Published on: 15 October 2023

Primary Category: Computation and Language

Paper Authors: Weixuan Wang, Barry Haddow, Alexandra Birch, Wei Peng

Key Details

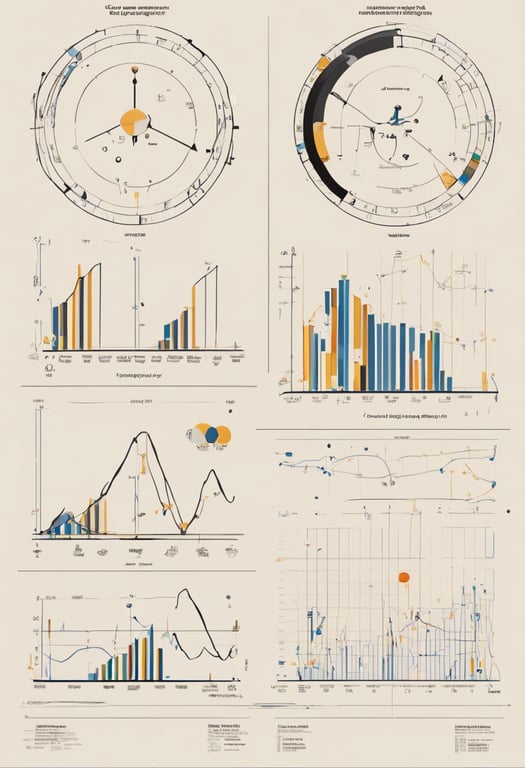

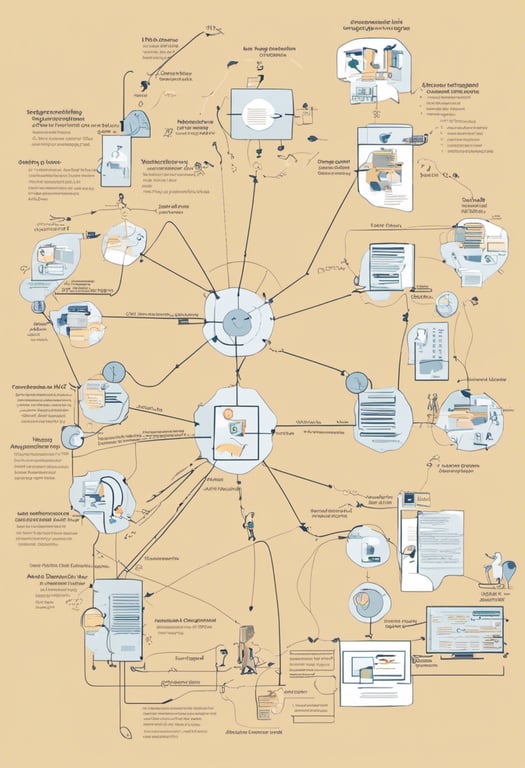

- Proposes the MONITOR metric to assess the factual reliability of language models
- MONITOR measures the deviation between a model's output probability distributions when the same fact is probed with different prompts
- Correlates with accuracy but is more nuanced and 3x faster to compute
- Tested on 12 models with up to 30B parameters
- Releases FKTC (Factual Knowledge Test Corpus), a new test set of 210k prompts

AI-generated summary

This paper proposes MONITOR, a new metric for evaluating how reliably large language models produce factually correct answers. MONITOR measures the deviation between the probability distributions a model produces when the same fact is probed with differently phrased prompts. Experiments on 12 models show that MONITOR correlates with accuracy while being more nuanced and 3x faster to compute.
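The summary does not give MONITOR's exact formula, so the following is only a minimal sketch of the general idea: score a model's reliability on one fact by how far its answer distributions under alternative prompts drift from the distribution under an anchor prompt. It assumes every prompt yields probabilities over the same list of candidate answers, and it uses the Jensen-Shannon divergence as a stand-in distance. The function name `prompt_deviation_score` is hypothetical, and this is not the paper's metric.

```python
import math
from typing import Sequence


def _normalize(p: Sequence[float]) -> list[float]:
    """Rescale non-negative scores so they sum to 1."""
    total = sum(p)
    if total <= 0:
        raise ValueError("distribution must have positive mass")
    return [x / total for x in p]


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) in nats; terms where p_i == 0 contribute nothing."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """Symmetric Jensen-Shannon divergence, bounded above by ln 2."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


def prompt_deviation_score(
    anchor: Sequence[float], variants: Sequence[Sequence[float]]
) -> float:
    """Hypothetical MONITOR-style score (illustrative stand-in only):
    mean JS divergence between the anchor prompt's answer distribution
    and each variant prompt's distribution over the same candidates.
    Lower values mean the answer distribution is more stable across prompts."""
    if not variants:
        raise ValueError("need at least one variant distribution")
    a = _normalize(anchor)
    return sum(js_divergence(a, _normalize(v)) for v in variants) / len(variants)


if __name__ == "__main__":
    # Made-up probabilities over the same candidates, e.g. ["Paris", "Lyon", "Nice"]
    anchor = [0.8, 0.15, 0.05]
    stable = [[0.78, 0.17, 0.05], [0.82, 0.13, 0.05]]
    unstable = [[0.2, 0.7, 0.1], [0.3, 0.3, 0.4]]
    print(prompt_deviation_score(anchor, stable) < prompt_deviation_score(anchor, unstable))  # True
```

Jensen-Shannon is used here because it is symmetric and stays finite even when one prompt assigns zero probability to an answer, which plain KL divergence does not; any other distribution distance could be swapped in under the same structure.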

You might also like

Assessing Language Models' Accuracy Across Languages

Quantifying Uncertainty in Language Model Explanations

Automated generation of incorrect options and feedback for math multiple-choice questions

Evaluating NLP Classification Performance

Predicting QA performance of large language models

Improving QA Systems with Feedback Loops
