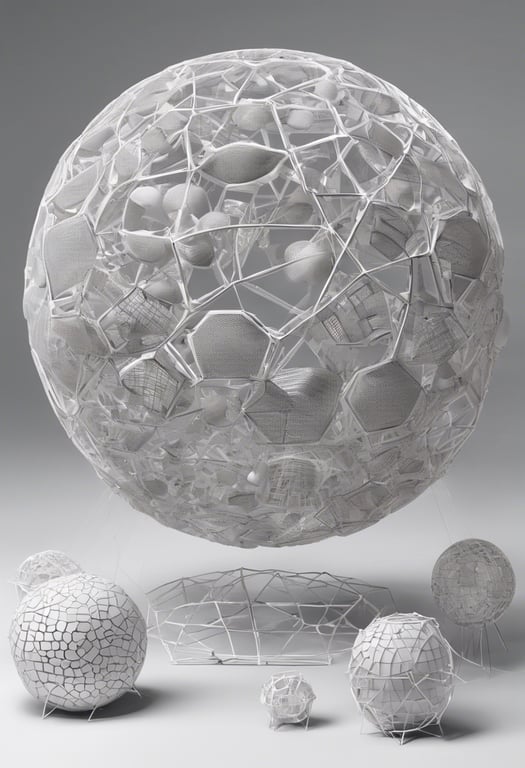

Using vision-language models to annotate 3D objects

Published on:

29 November 2023

Primary Category:

Computer Vision and Pattern Recognition

Paper Authors:

Rishabh Kabra,

Loic Matthey,

Alexander Lerchner,

Niloy J. Mitra

Bullets

Key Details

Introduces methods to aggregate vision-language model outputs across views/questions

Shows aggregations outperform standalone language models for annotation

Demonstrates annotating 3D objects for semantics and physics, zero-shot

Matches quality of human labels for object type/material on large dataset

Explore the topics in this paper

AI generated summary

Using vision-language models to annotate 3D objects

This paper explores how to leverage large vision-language models to annotate 3D objects with semantic, physical, and other properties in a zero-shot setting. It introduces methods to aggregate model outputs across different views and questions to produce robust annotations. Evaluations on a large 3D dataset show these annotations can approach human quality without additional model training.

Answers from this paper

You might also like

Comments

No comments yet, be the first to start the conversation...

Sign up to comment on this paper