Texturing 3D Models with Words

Published on: 3 April 2024

Primary Category: Computer Vision and Pattern Recognition

Paper Authors: Duygu Ceylan, Valentin Deschaintre, Thibault Groueix, Rosalie Martin, Chun-Hao Huang, Romain Rouffet, Vladimir Kim, Gaëtan Lassagne

Key Details

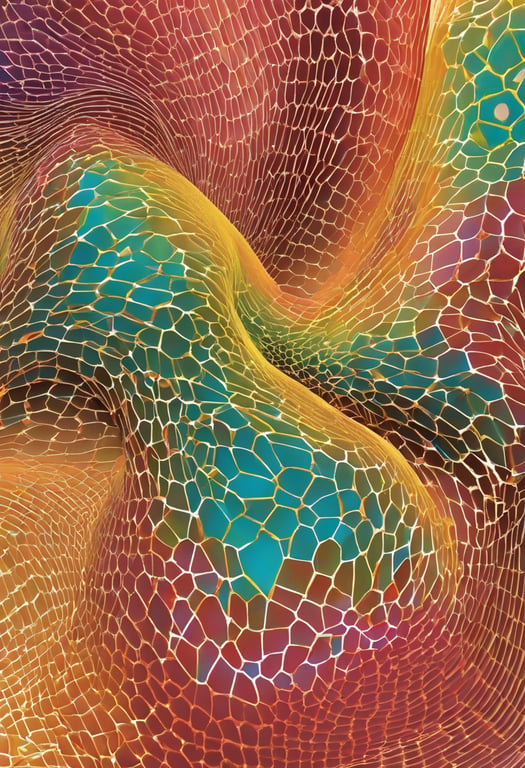

Leverages large-scale text-to-image models to texture 3D assets

Uses grid pattern diffusion for consistent texturing across views

Refines textures over multiple passes to improve quality

Assigns retrieved parametric materials for relighting and editing

Significantly outperforms prior state-of-the-art texturing methods

AI-generated summary

This paper presents a method for generating high-quality, editable textures on 3D models from text prompts, using large-scale text-to-image models. It textures a model by generating images of it from multiple views, using a grid pattern diffusion strategy to keep those views consistent with one another. The textures are refined over multiple passes and then used to retrieve parametric materials, which enables relighting and editing.
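To make the grid idea concrete, here is a minimal sketch of the packing step only: several per-view images are tiled into one grid image so that a single generation pass could see all views together, and the grid is then split back into views. The summary does not specify the grid layout, image format, or how the text-to-image model is invoked, so the names `tile_views` and `split_grid`, the row-major layout, and the zero padding are illustrative assumptions, and the diffusion step itself is omitted.

```python
from typing import List

# A grayscale image as rows of pixel values (an assumption made for brevity).
Image = List[List[int]]


def tile_views(views: List[Image], cols: int) -> Image:
    """Pack equally sized view images into one grid image, row-major.

    Hypothetical helper: unused cells in the last grid row are zero-filled.
    """
    if not views or cols <= 0:
        raise ValueError("need at least one view and a positive column count")
    h, w = len(views[0]), len(views[0][0])
    if any(len(v) != h or any(len(r) != w for r in v) for v in views):
        raise ValueError("all views must share the same size")
    rows = -(-len(views) // cols)  # ceiling division
    grid = [[0] * (cols * w) for _ in range(rows * h)]
    for i, view in enumerate(views):
        top, left = (i // cols) * h, (i % cols) * w
        for y in range(h):
            grid[top + y][left:left + w] = view[y]
    return grid


def split_grid(grid: Image, n_views: int, cols: int, h: int, w: int) -> List[Image]:
    """Inverse of tile_views: cut the grid back into n_views images of size h x w."""
    views = []
    for i in range(n_views):
        top, left = (i // cols) * h, (i % cols) * w
        views.append([row[left:left + w] for row in grid[top:top + h]])
    return views


if __name__ == "__main__":
    # Three tiny 2x2 "views" filled with 1, 2, and 3, packed two per row.
    demo_views = [[[i] * 2 for _ in range(2)] for i in range(1, 4)]
    demo_grid = tile_views(demo_views, cols=2)
    for row in demo_grid:
        print(row)
    # The round trip recovers the original views.
    print(split_grid(demo_grid, 3, 2, 2, 2) == demo_views)
```

In a real pipeline the grid image would be passed through the generative model between the two calls; that step is left out here because the summary gives no details about it.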
