Quantifying model generalization capability

Published on: 2 May 2024

Primary Category: Machine Learning

Paper Authors: Luciano Dyballa, Evan Gerritz, Steven W. Zucker


Key Details

Proposes a method to quantify a model's generalization ability using unseen data

Evaluates latent spaces of intermediate layers with cluster separation metrics

High accuracy on seen classes does not mean good generalization

Generalization ability varies across layers and architectures

Approach reveals intrinsic model properties useful for compression
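The summary does not name the cluster separation metrics the authors use. As one common example, the silhouette coefficient scores how well labelled points in a latent space fall into separate clusters. Below is a minimal, dependency-free sketch of it. Using silhouette here is an assumption for illustration, not necessarily the paper's metric.

```python
import math
from typing import Sequence


def silhouette_score(points: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Mean silhouette coefficient over all points.

    Near 1: classes form tight, well-separated clusters.
    Near 0: clusters overlap. Negative: points sit closer to another class.
    (Illustrative stand-in metric, not taken from the paper.)
    """
    # Group point indices by class label.
    by_label: dict = {}
    for i, lab in enumerate(labels):
        by_label.setdefault(lab, []).append(i)
    if len(by_label) < 2:
        raise ValueError("need at least two classes")

    total = 0.0
    for i, p in enumerate(points):
        own = by_label[labels[i]]
        if len(own) == 1:
            continue  # convention: a singleton cluster contributes 0
        # a: mean distance to the other members of the same class.
        a = sum(math.dist(p, points[j]) for j in own if j != i) / (len(own) - 1)
        # b: smallest mean distance to the members of any other class.
        b = min(
            sum(math.dist(p, points[j]) for j in idx) / len(idx)
            for lab, idx in by_label.items()
            if lab != labels[i]
        )
        denom = max(a, b)
        if denom > 0:
            total += (b - a) / denom
    return total / len(points)


# Two tight, far-apart classes score close to 1; interleaved labels score below 0.
pts = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 5.0)]
print(silhouette_score(pts, [0, 0, 1, 1]))
print(silhouette_score(pts, [0, 1, 0, 1]))
```

Applied to the embeddings of unseen-class samples taken from one layer, a higher score would indicate that the layer separates those new classes better.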


AI-generated summary

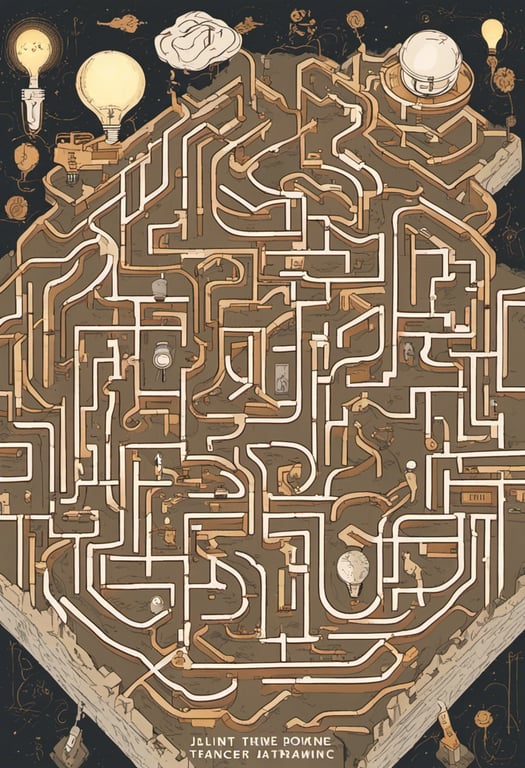

Quantifying model generalization capability

This paper introduces a method to evaluate how well deep learning image classifiers generalize to related but unseen data. After a model is trained on one dataset, the latent spaces of its intermediate layers are probed with a different dataset from the same domain, whose classes the model has never seen. A metric quantifies how well these unseen classes form separable clusters in each latent space. This reveals which layers have the most intrinsic generalization ability, with implications for model compression. Surprisingly, high accuracy on the seen (training) classes does not imply good generalization. The approach is validated across multiple datasets and metrics.
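The layer-wise evaluation described above can be sketched as follows. The sketch assumes you have already extracted, for every intermediate layer, the embeddings of the unseen-class samples. Each layer is then scored and the layers are ranked. As a stand-in separability metric it uses leave-one-out 1-nearest-neighbour accuracy, which is an assumption and not necessarily one of the paper's metrics. The layer names `block2` and `block4` and the toy embeddings are hypothetical.

```python
from __future__ import annotations

import math
from typing import Mapping, Sequence

Embedding = Sequence[float]


def loo_1nn_accuracy(points: Sequence[Embedding], labels: Sequence[int]) -> float:
    """Leave-one-out 1-NN accuracy: fraction of points whose nearest *other*
    point has the same label. Higher means more separable classes.
    (Stand-in metric chosen for illustration.)"""
    correct = 0
    for i, p in enumerate(points):
        # Index of the nearest neighbour, excluding the point itself.
        j = min(
            (k for k in range(len(points)) if k != i),
            key=lambda k: math.dist(p, points[k]),
        )
        correct += labels[j] == labels[i]
    return correct / len(points)


def rank_layers(
    layer_embeddings: Mapping[str, Sequence[Embedding]],
    unseen_labels: Sequence[int],
) -> list[tuple[str, float]]:
    """Score each layer's latent space on unseen-class samples, best first."""
    scores = [
        (name, loo_1nn_accuracy(emb, unseen_labels))
        for name, emb in layer_embeddings.items()
    ]
    return sorted(scores, key=lambda s: s[1], reverse=True)


# Hypothetical embeddings of four unseen-class samples at two layers.
labels = [0, 0, 1, 1]
layers = {
    "block2": [(0.0, 0.0), (0.2, 0.1), (3.0, 3.0), (3.1, 2.9)],  # classes apart
    "block4": [(0.0, 0.0), (1.0, 1.0), (0.1, 0.1), (1.1, 0.9)],  # interleaved
}
print(rank_layers(layers, labels))  # [('block2', 1.0), ('block4', 0.0)]
```

In this toy case an earlier layer separates the unseen classes better than a later one. If that pattern held in a real model, it would hint at where the network could be truncated for compression, which is the kind of implication the summary mentions.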


You might also like

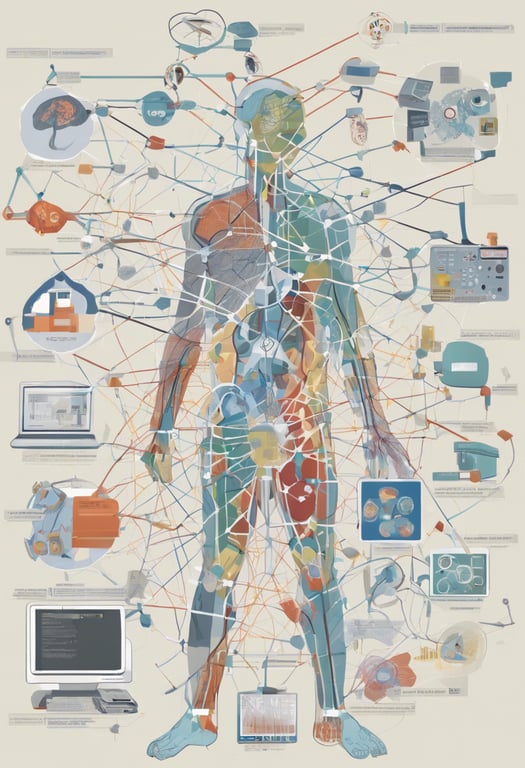

Learning hybrid feature representations for image classification

Assessing model performance on unseen data

Review of Deep Learning Generalization for Medical Imaging

Comparing images based on object identity

Using training dynamics to enhance compositional generalization

Validity of machine learning models using indirect labeling
